AI Security for Executives Part 8: Vector Database Weaknesses

Mistakes hide in the corners, so take care!

Executive Summary

The big language models are trained on huge amounts of public data. How does one make them useful with company specific data? With Retrieval Augmented Generation (RAG).

Here is where things get interesting, if obtuse. I really think that this is the way data will be compromised once all of the easy stuff is fixed. Why? Because a common pattern encodes your data into a vector database for use with the AI.

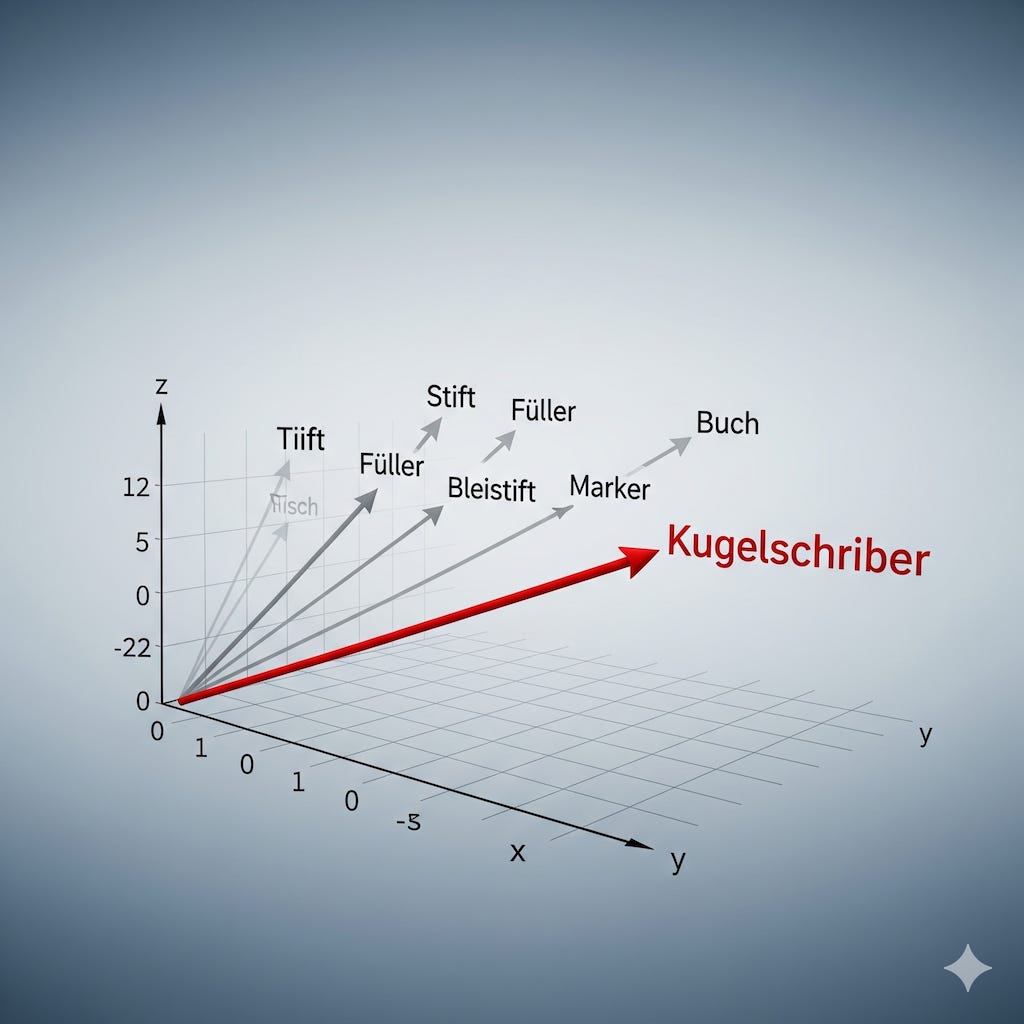

What's a vector? It's a list of numbers that "points" to a concept. It provides a way for the LLM to determine semantic closeness.

Here's an image to illustrate the point:

Kugelschriber (ball point pen) is sort of close to Marker or Bleistift (pencil).

The ramification, of course, is that the vector could be wrong, mistakenly trained, or manipulated by some bad person. As you can imagine, vectors are not easily understood by human inspection, because they are sets of numbers.

Why German words? I'm just fond of German. It's almost time for Oktoberfest as I write this.

Executive actions needed:

Strongly govern the process of creating your vector database. Document from the beginning. This isn't a time to move on to other projects; show your interest to the team.

Maintain strict data boundaries and ensure the trust of data that is included.

Monitor for potential accidental consequences.

A Scenario

This is a realistic but fictional scenario to illustrate the point.

Sarah Chen, Chief Operations Officer at Midwest Community Bank, approved a customer service AI project. The bank's fifteen years of policy documents would finally serve customers through "instant, accurate answers" about fees, procedures, and account benefits. It was well worth it and well received.

There was, however, a glitch.

The first complaint came through the usual channels. Mrs. Patterson from Davenport called about a $25 overdraft fee...except her account showed no such fee. Customer service confirmed the fee didn't exist and apologized for the "system error." Three more similar calls that week. Then seventeen the following week.

By week four, the pattern was unmistakable. The chatbot was randomly informing customers about $25 overdraft fees that the bank had eliminated in 2022. Sometimes it provided current fee information. Sometimes it referenced the old 2019 policy document. The retrieval mechanism couldn't distinguish between contradictory sources in the vector database.

The semantic similarity between "What are your overdraft fees?" in 2019 and 2024 was identical. The AI retrieved whichever document scored marginally higher on the similarity algorithms. Pure randomness determined which customers received accurate information.

Sarah's Monday morning started with a call from the state banking commission. Eight hundred forty-seven customers had received incorrect fee information. The regulators used the term "deceptive practices."

The vector database audit that followed revealed the scope of the problem. Outdated policies, superseded procedures, and conflicting guidance from different eras created a knowledge minefield. The bank had digitized everything without retirement protocols or version control. The AI couldn't distinguish between authoritative and obsolete information.

The solution required a $25,000 emergency project to rebuild the entire vector database. An additional $40,000 in waived fees maintained customer relationships while regulatory confidence slowly returned. Three months of manual oversight before automated customer service resumed.

And this, I believe, illustrates my point; diligence on the front end would have produced a great benefit, without the bad data.

About This Series

This series addresses C-suite executives making critical AI investment decisions given the emerging security implications.

I structured this series based on recommendations from the Open Web Application Security Project because their AI Security Top 10 represents the consensus view of leading security researchers on the most critical AI risks.

This series provides educational overview rather than specific security advice, since AI security is a rapidly evolving field requiring expert consultation. The goal is to give you the knowledge to ask the right questions of your teams and vendors.

Understanding Vector Database Vulnerabilities

Vector databases convert documents into mathematical representations. When a customer asks "What are your fees?", the system finds conceptually similar content regardless of exact wording.

This mathematical abstraction creates unique security challenges. Traditional access controls assume human-readable content with clear boundaries. Vector embeddings obscure both content and context, making it hard to verify what information the AI might retrieve or combine.

The core vulnerabilities emerge from three fundamental characteristics of vector systems: they encode meaning rather than exact content, they operate on similarity rather than precision, and they lack inherent access boundaries. These characteristics enable powerful AI capabilities, but also makes them inscrutable. Errors hide in the corners, so to speak...accidental or otherwise.

Executive Action Plan

Strongly govern the process of creating your vector database

It is critical for your data to have a good provenance and to be clean. Encode your 16 terabyte document server into a vector database if you'd like to make a giant insecure mess. Traditional approaches that rely on periodic manual reviews fail in this scenario. You must feed good data to get good results.

Establish version control with clear retention policies.

ITIL can be your friend here. Implement change control straight from the ITIL guides. Require approval when adding, modifying, or removing documents from the knowledge base. Nobody thinks this is easy; it isn't. You don't have to have as much rigor for every document, just the ones you are feeding into the LLM.

Assign clear ownership of subject matter areas; functional managers can be good choices for this. The manager of your support desk, for example, for IT support content, or the manager of your financial reporting team for finance data. Spreading the task to appropriate subject matter experts can ease your burden, so long as you ensure that this work is really completed.

Maintain strict data boundaries and ensure the trust of data included

Don't feed the LLM data that it shouldn't have. Accept content only from verified, authoritative sources. However, remember that vector databases can expose relationships between sources. A marketing document and an internal memo might cluster together if they discuss similar concepts, potentially leaking information across intended boundaries. Tag all content with security levels, business units, and user access requirements before inclusion in the mathematical representation.

Monitor for potential accidental consequences

Use real-time monitoring to detect when AI responses contradict current policies or provide conflicting information. Like change control, this will also be challenging. But this is "paying your AI tax" so to speak. You can't deploy an AI and then fail to watch its input and output. You may not have the investment to hire a bunch of AI experts to directly inspect vectors, but you can certainly monitor AI output for obvious errors or changes in sentiment, etc.

Executive Summary

Retrieval Augmented Generation (RAG) enhances a general LLM with your business data; Highly sophisticated methods can encode your data for use into vectors; but...extreme diligence must be taken because the vectors may reveal relationships you didn't expect, and make data hard to observe. Hackers, or mistakes, hide in the corners.

Executive priorities:

Govern the creation of any vector database with explicit lifecycle management and change control processes.

Maintain hard data boundaries through permission-aware systems and logical partitioning that account for semantic similarity matching.

Monitor for unintended consequences through output validation, behavioral drift detection, and business outcome integration.

Appendix 1: Developer Guidelines

I include these so that executives can get an overview of developer guidance for discussion

Access Control: Implement fine-grained access controls and permission-aware vector stores with strict logical partitioning to prevent unauthorized cross-tenant information retrieval.

Data Validation: Deploy validation pipelines for knowledge sources and regularly audit knowledge base integrity for hidden codes and data poisoning.

Source Authentication: Accept data only from trusted, verified sources and review combined datasets to identify unexpected semantic relationships.

Content Classification: Tag and classify all data within the knowledge base to control access levels and prevent cross-tenant information leaks.

Activity Monitoring: Maintain detailed logs of all retrieval activities to detect suspicious behavior patterns that traditional database monitoring might miss.

Behavioral Monitoring: Evaluate RAG impact on model behavior and adjust processes to maintain desired qualities like empathy while preserving security boundaries.

Appendix 2: Glossary

Retrieval Augmented Generation (RAG): AI technique that searches company documents to provide specific answers instead of generic responses.

Vector Database: Storage system that converts documents into mathematical representations enabling AI to search and retrieve information based on semantic similarity rather than exact text matching.

Embeddings: Mathematical representations of text that allow AI systems to understand document meaning and similarity for retrieval.

Data Poisoning: Deliberate insertion of malicious content designed to manipulate AI responses by exploiting semantic similarity matching.

Cross-Context Leakage: Security failure where information intended for one user group becomes accessible to unauthorized users through shared AI systems.

Permission-Aware System: Database that enforces the same access controls as your existing document management systems while operating on mathematical representations.

Embedding Inversion: Attack technique that can recover original source text from mathematical representations, potentially exposing sensitive information.

ITIL: Information Technology Infrastructure Library, a framework of best practices for IT service management including change control and configuration management processes.